=Microphone Array=

Objective

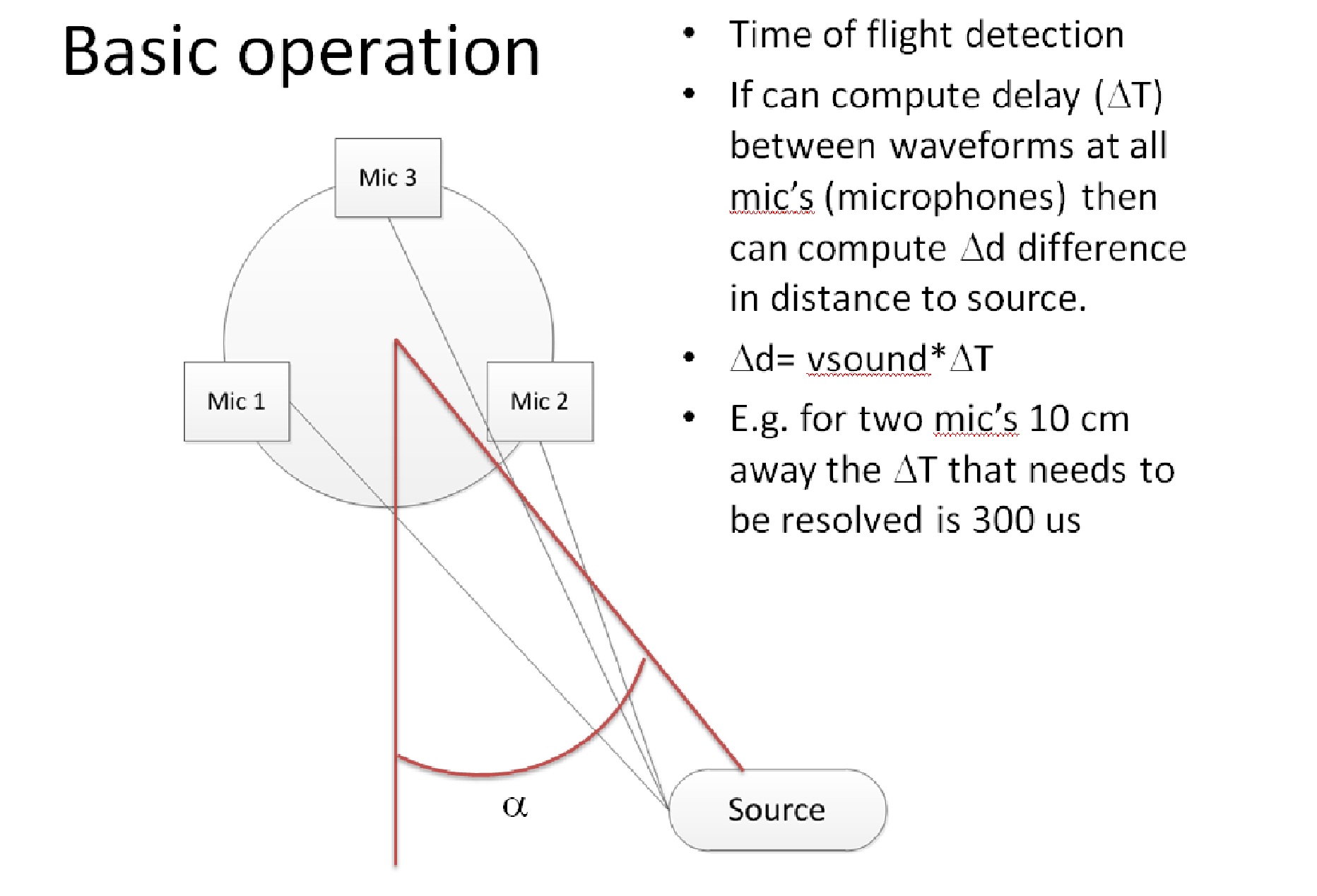

Build a processing platform for microphone array data. The first objective is to compute the direction of a sound source. This is done by analyzing the waveforms acquired from different microphones: correlating the signals of any pair of microphones in the array gives the delay between the two waveforms, hence the difference in distance to the source, and from there the probable direction of the source with respect to the array.
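The geometry behind this step can be written out as a short derivation. It is a sketch that assumes a far-field source (plane wavefront), two microphones spaced d apart, speed of sound c, sampling frequency f_s, and the angle θ measured from broadside (perpendicular to the line joining the microphones); these symbols are introduced here for illustration.

```latex
\Delta r = d \sin\theta, \qquad
\tau = \frac{\Delta r}{c} = \frac{d \sin\theta}{c}, \qquad
k = \tau f_s = \frac{f_s\, d \sin\theta}{c}

\theta = \arcsin\!\left(\frac{c\, k}{f_s\, d}\right), \qquad
|k| \le k_{\max} = \frac{f_s\, d}{c}
```

Here Δr is the path-length difference, τ the time delay and k the delay in samples found by the correlation. A sample-by-sample search only yields integer k, which is what ties the angular resolution to the sampling frequency.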

Features

Initial

- Simple terminal interface for user commands

- State machine with 3 basic states:

  - Armed: waiting for a trigger. Analog inputs are sampled on a timer interrupt and the data is acquired into a circular buffer

  - Triggered: acquisition continues and stops once the circular buffer has gone through one full cycle

  - Processing: the interrupt is turned off and the correlation between waveforms is computed (currently implemented for 2 microphones only)

- The correlation used to detect the delay is, in this first implementation, a straightforward multiplication of arrays (N*delayRange operations)
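A minimal sketch of this direct approach in C, assuming offset-compensated signed 16-bit samples. The names `best_lag`, `a`, `b` and `max_lag` are hypothetical, not taken from the published code. For each candidate lag it sums the products over a fixed window, so every lag uses the same number of terms; the cost is roughly N × (2·max_lag + 1) multiply-accumulates, i.e. the N*delayRange figure above.

```c
#include <stdint.h>

/* Direct time-domain cross-correlation delay search (sketch).
 * a, b    : offset-compensated samples from two microphones, length n
 * max_lag : largest delay searched, in samples (delay range 2*max_lag+1)
 * Returns the lag that maximizes sum_i a[i] * b[i + lag]; a positive lag
 * means the waveform reaches microphone b later than microphone a.
 * Requires n > 2 * max_lag. */
int best_lag(const int16_t *a, const int16_t *b, int n, int max_lag)
{
    int best = 0;
    int64_t best_sum = INT64_MIN;

    for (int lag = -max_lag; lag <= max_lag; ++lag) {
        int64_t sum = 0;
        /* Fixed window [max_lag, n - max_lag) keeps i + lag in bounds and
         * gives every lag the same number of products. */
        for (int i = max_lag; i < n - max_lag; ++i)
            sum += (int32_t)a[i] * (int32_t)b[i + lag];
        if (sum > best_sum) {
            best_sum = sum;
            best = lag;
        }
    }
    return best;
}
```

The returned lag k can then be converted to an angle with the far-field relation sin θ = c·k / (f_s·d).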

Future

- A future FFT-based implementation (N*log2N operations) should cut processing time by 6x or so

- Support multiple microphone arrays

Requirements

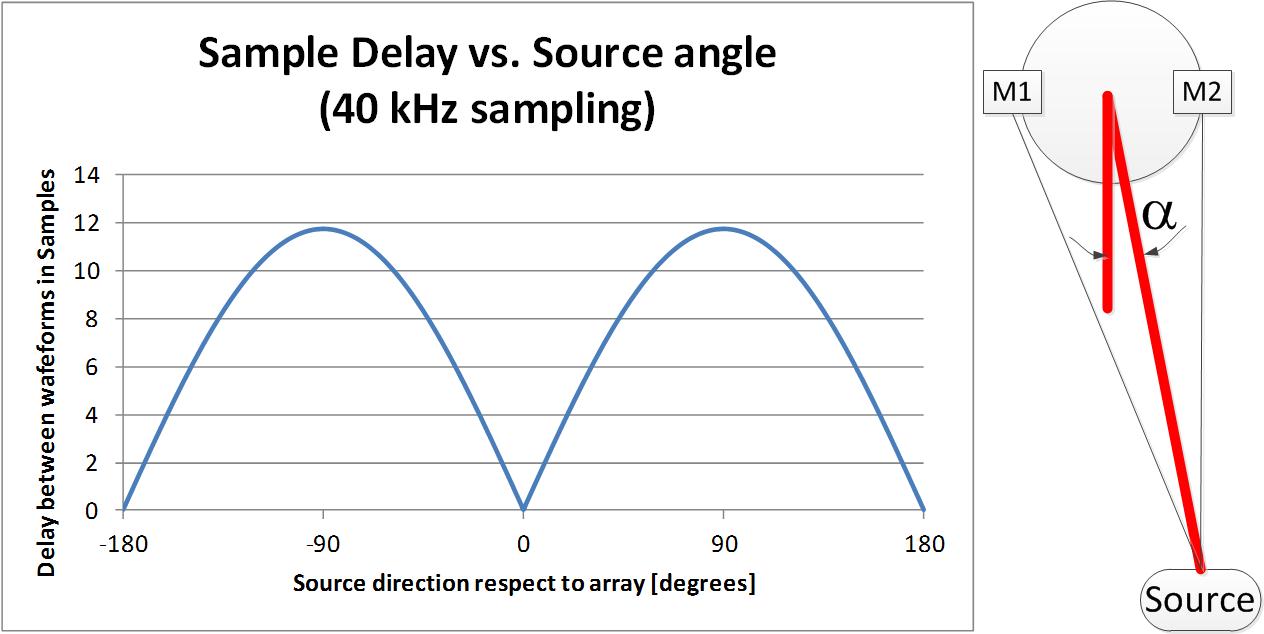

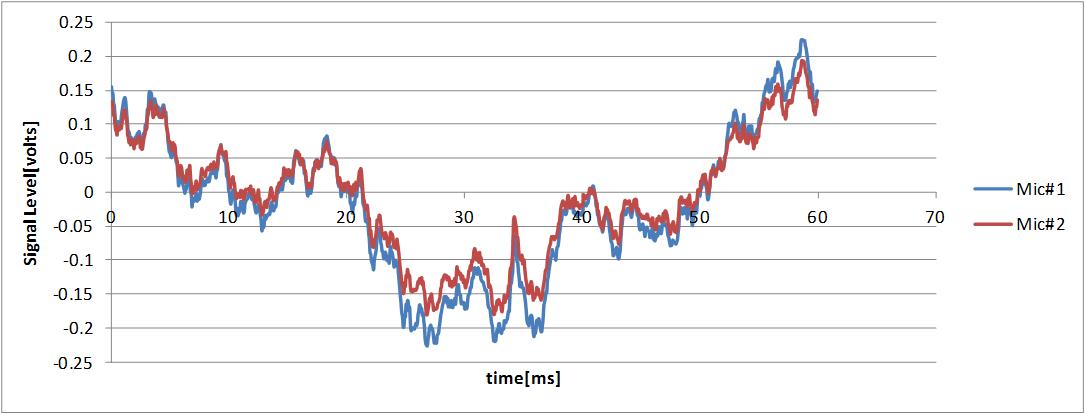

- The required angular resolution depends on the specific application; 10 degrees is a good starting value. For two microphones 10 cm apart this translates into a sampling frequency of 40 kHz, as depicted in the plot above, which shows the delay between the waveforms from the two microphones in number of samples.

- Storage for the data buffers is required. The amount of storage depends on the number of microphones and the required overall sampling time. The minimum sampling time depends on the lowest frequency that needs to be detected; for speech events, for example, this minimum may be set at 100 Hz (http://physics.info/sound/). So for 2 microphones gathering 16-bit integer data at 40 kHz, the memory required is 8 kB (assuming 5 full periods of the minimum frequency are acquired).
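The storage estimate can be checked with a small C helper. It is a sketch with a hypothetical name and parameters, computing samples per channel as periods × fs / f_min (rounded up) and multiplying by the number of microphones and bytes per sample.

```c
#include <stddef.h>

/* Bytes of buffer storage needed to capture `periods` full cycles of the
 * lowest frequency of interest on every microphone.
 * samples per channel = ceil(periods * fs_hz / f_min_hz) */
size_t buffer_bytes(unsigned n_mics, unsigned fs_hz, unsigned f_min_hz,
                    unsigned periods, unsigned bytes_per_sample)
{
    size_t samples = ((size_t)periods * fs_hz + f_min_hz - 1) / f_min_hz;
    return samples * n_mics * bytes_per_sample;
}
```

For 2 microphones, 40 kHz, 100 Hz, 5 periods and 16-bit samples this gives 2000 samples per channel and 8000 bytes, i.e. the 8 kB quoted above.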

- Processing time is a hard real time constraint for this system. If the system is to perform

System Diagram

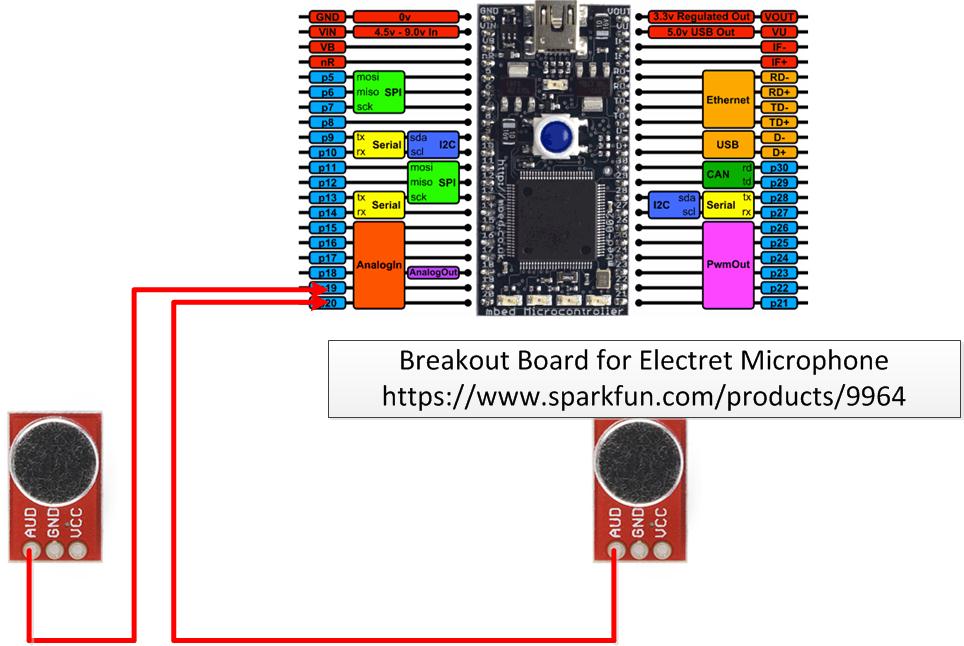

- I use two SparkFun electret microphone breakout boards (https://www.sparkfun.com/products/9964). These are convenient because the microphone output is pre-amplified, scaled to the supply voltage (I run them from the mbed 3.3 V supply) and AC coupled.

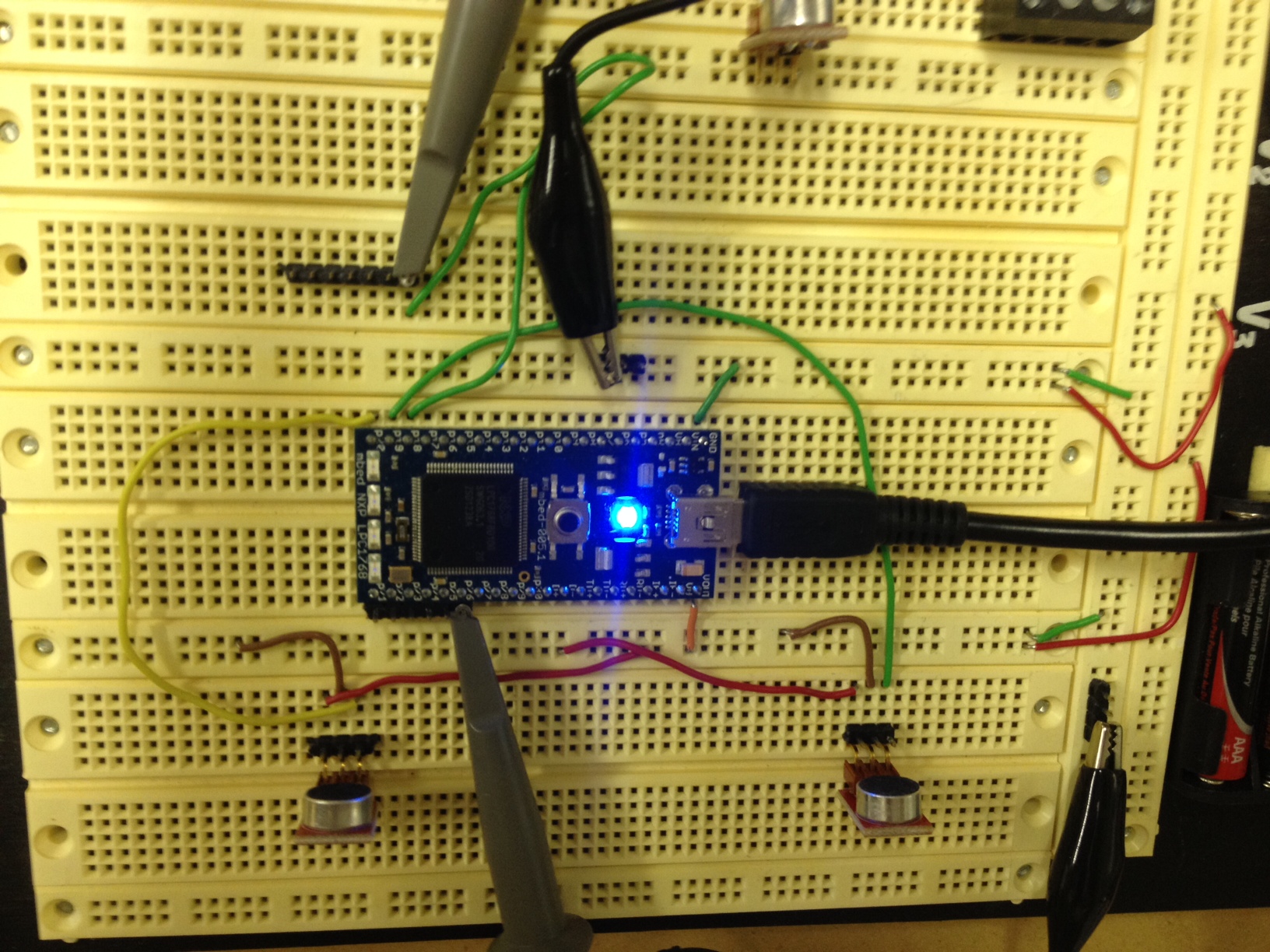

- The system is mounted on a breadboard. For debugging purposes I output several digital signals (ISR start/end) as well as an analog signal that mirrors the microphone input read on the analog inputs.

Current Implementation

- State machine with 4 states: Acquiring, Triggered, Processing, Idle

- Serial Terminal Thread with simple one-character commands

- Ticker interrupt for periodic sampling of microphones

- Thread that waits for the acquired-data signal and verifies the trigger

- Processing thread that computes the correlation after the trigger has occurred. Currently acquisition is stopped until processing is completed
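The four states and the circular buffer can be sketched compactly in C. This is a simplification, not the published code: the trigger test is folded into the per-sample routine instead of a separate thread, both channels are checked against one threshold, `BUF_LEN` is an assumed size, and the Idle transitions (`acq_arm`, `acq_stop`) stand in for hypothetical terminal commands. All names are illustrative.

```c
#include <stdint.h>

#define BUF_LEN 2000   /* samples per channel; assumed (8 kB for 2 mics) */

typedef enum { ST_IDLE, ST_ACQUIRING, ST_TRIGGERED, ST_PROCESSING } AcqState;

typedef struct {
    AcqState state;
    int16_t ch0[BUF_LEN];   /* circular buffers of offset-compensated samples */
    int16_t ch1[BUF_LEN];
    unsigned head;          /* next write position in the circular buffers */
    unsigned post_count;    /* samples stored since (and including) the trigger */
    int16_t threshold;      /* trigger level in ADC counts, > 0 */
} Acq;

/* Hypothetical terminal commands: arm from Idle, or stop back to Idle. */
void acq_arm(Acq *s)  { s->head = 0; s->post_count = 0; s->state = ST_ACQUIRING; }
void acq_stop(Acq *s) { s->state = ST_IDLE; }

static int exceeds(int16_t x, int16_t t) { return x > t || x < -t; }

/* Called once per sampling tick. In the firmware this would run from the
 * Ticker ISR, and the state would have to be shared safely with the threads. */
void acq_on_sample(Acq *s, int16_t x0, int16_t x1)
{
    if (s->state != ST_ACQUIRING && s->state != ST_TRIGGERED)
        return;                           /* Idle or Processing: drop sample */

    s->ch0[s->head] = x0;
    s->ch1[s->head] = x1;
    s->head = (s->head + 1) % BUF_LEN;

    if (s->state == ST_ACQUIRING) {
        if (exceeds(x0, s->threshold) || exceeds(x1, s->threshold)) {
            s->state = ST_TRIGGERED;
            s->post_count = 1;            /* the triggering sample itself */
        }
    } else if (++s->post_count >= BUF_LEN) {
        s->state = ST_PROCESSING;         /* buffer went through one full cycle */
    }
}

/* Called by the processing thread once the correlation is finished;
 * acquisition resumes. */
void acq_processing_done(Acq *s) { s->state = ST_ACQUIRING; }
```

When the state reaches `ST_PROCESSING`, `head` points back at the triggering sample, which is then the oldest sample in the buffer, so the processed window starts exactly at the trigger.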

Testing and Status 3/25/14

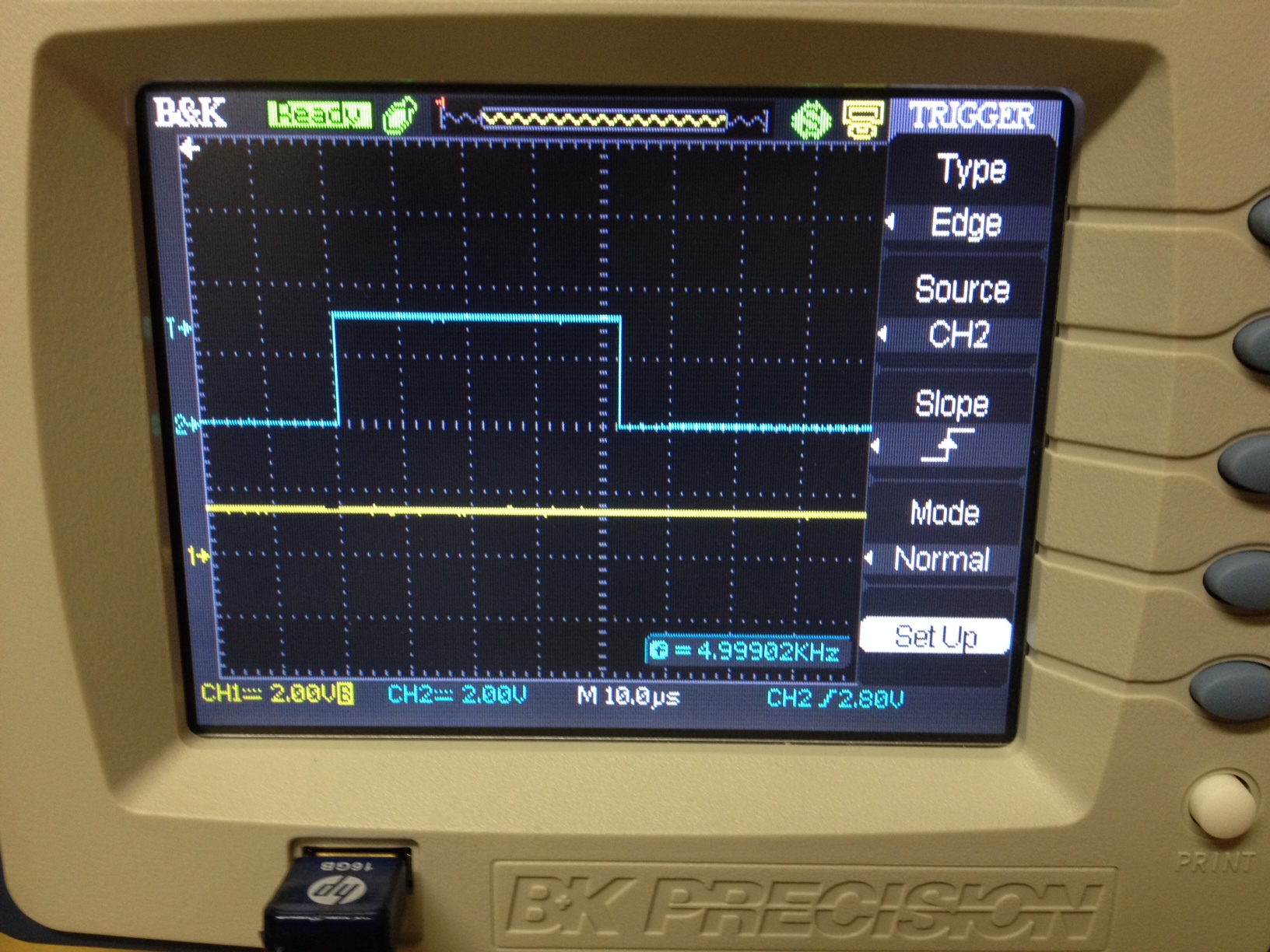

- Experimentation showed that each call to the AnalogIn library's read_u16() took about 21 µs, so two calls extended the interrupt routine to about 42 µs (shown in the oscilloscope screenshot), limiting the currently achievable sampling rate to 12.5 kHz for two microphones. A future implementation needs to solve this by bypassing the library and accessing the relevant LPC setup registers directly.

- There is an issue with the trigger implementation: by the time the data is acquired, most events have finished and the program is processing mostly noise. Because of this, the source direction is currently not detected correctly. Note that the trigger level is set at about ±0.8 V (the actual analog input range is 0-3.3 V; for processing I offset it by the equivalent of 1.65 V, so the trigger checks whether the offset-compensated signal is above 0.8 V or below -0.8 V). Processing is all done in uint16_t type for efficient multiplications. The acquired data, shown in the accompanying plot, spans only about -0.2 to 0.2 V, which supports the triggering issue identified above that needs to be solved.
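The offset compensation and trigger comparison can be expressed in ADC counts. mbed's `AnalogIn::read_u16()` returns a value normalized to 0x0000-0xFFFF over the input range; this sketch additionally assumes that the 1.65 V offset corresponds to 32768 counts, and the helper names are hypothetical.

```c
#include <stdint.h>

#define VREF_V     3.3      /* analog input full scale (0 to 3.3 V) */
#define FULL_SCALE 65535.0  /* read_u16() value at VREF_V */
#define MID_COUNT  32768    /* offset equivalent to 1.65 V (VREF_V / 2), assumed */

/* Voltage relative to mid-supply -> ADC counts, rounded to nearest. */
int32_t volts_to_counts(double v)
{
    double c = v / VREF_V * FULL_SCALE;
    return (int32_t)(c >= 0.0 ? c + 0.5 : c - 0.5);
}

/* Remove the 1.65 V offset from a raw 16-bit sample. */
int32_t center_sample(uint16_t raw)
{
    return (int32_t)raw - MID_COUNT;
}

/* Nonzero if the offset-compensated sample is above +level_v or below -level_v. */
int crosses_trigger(uint16_t raw, double level_v)
{
    int32_t x = center_sample(raw);
    int32_t t = volts_to_counts(level_v);
    return x > t || x < -t;
}
```

Under these assumptions the ±0.8 V trigger level corresponds to about ±15887 counts, while a ±0.2 V signal is only about ±3972 counts, so `crosses_trigger()` does not fire on it.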

- Current operational code is published at: http://mbed.org/users/nleoni/code/DirectionalMicrophone/

1 comment on =Microphone Array=:


From what I understand of acoustic localization, I think you have to sample the microphone inputs simultaneously to get an accurate time delay estimation. How exactly did you achieve the simultaneous sampling?