mbed library sources


Superseded

This library was superseded by mbed-dev - https://os.mbed.com/users/mbed_official/code/mbed-dev/.

Development branch of the mbed library sources. This library is kept in sync with the latest changes from the mbed SDK, and it is not guaranteed to work.

If you are looking for a stable and tested release, please import one of the official mbed library releases:

Import library: mbed

The official Mbed 2 C/C++ SDK provides the software platform and libraries to build your applications.

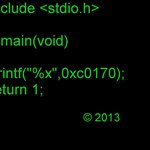

vendor/NXP/LPC4088/cmsis/core_cm4_simd.h@10:3bc89ef62ce7, 2013-06-14 (annotated)

- Committer: emilmont

- Date: Fri Jun 14 17:49:17 2013 +0100

- Revision: 10:3bc89ef62ce7

Unify mbed library sources
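The header annotated in this revision, core_cm4_simd.h, maps CMSIS names such as `__UADD8`, `__UQADD8` and `__PKHBT` onto Cortex-M4 packed-data instructions. As a rough aid to reading it, the sketch below models three of them in portable C so they can be tried on a host machine. The `ref_*` names are hypothetical and are not part of CMSIS or mbed. The models ignore the APSR.GE flags that the real parallel add instructions update, and they assume a pack shift smaller than 32.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical host-side model of UADD8: four independent unsigned
   8-bit additions, each lane wrapping modulo 256. The APSR.GE flags
   set by the real instruction are not modelled here. */
static uint32_t ref_uadd8(uint32_t op1, uint32_t op2)
{
    uint32_t result = 0;
    for (unsigned lane = 0; lane < 4; ++lane) {
        unsigned shift = lane * 8u;
        uint32_t a = (op1 >> shift) & 0xFFu;
        uint32_t b = (op2 >> shift) & 0xFFu;
        result |= ((a + b) & 0xFFu) << shift;
    }
    return result;
}

/* Hypothetical model of UQADD8: same lanes, but each sum saturates
   at 0xFF instead of wrapping. */
static uint32_t ref_uqadd8(uint32_t op1, uint32_t op2)
{
    uint32_t result = 0;
    for (unsigned lane = 0; lane < 4; ++lane) {
        unsigned shift = lane * 8u;
        uint32_t sum = ((op1 >> shift) & 0xFFu) + ((op2 >> shift) & 0xFFu);
        if (sum > 0xFFu) {
            sum = 0xFFu;
        }
        result |= sum << shift;
    }
    return result;
}

/* Same expression as the __PKHBT macro in the armcc branch of the header:
   bottom halfword from a, top halfword from (b << shift).
   Assumes shift < 32. */
static uint32_t ref_pkhbt(uint32_t a, uint32_t b, unsigned shift)
{
    return (a & 0x0000FFFFu) | ((b << shift) & 0xFFFF0000u);
}

/* Small self-check showing wrapping versus saturating lanes. */
static void ref_selfcheck(void)
{
    assert(ref_uadd8(0x01FF7F80u, 0x01010101u) == 0x02008081u);  /* 0xFF + 1 wraps to 0x00 */
    assert(ref_uqadd8(0x01FF7F80u, 0x01010101u) == 0x02FF8081u); /* 0xFF + 1 stays at 0xFF */
    assert(ref_pkhbt(0x11112222u, 0x00003333u, 16u) == 0x33332222u);
}
```

Calling `ref_selfcheck()` from a host test program exercises all three models. On a Cortex-M4 target the intrinsics from the header itself would be used instead, since each compiles to a single instruction.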

Who changed what in which revision?

| User | Revision | Line number | New contents of line |

|---|---|---|---|

| emilmont | 10:3bc89ef62ce7 | 1 | /**************************************************************************//** |

| emilmont | 10:3bc89ef62ce7 | 2 | * @file core_cm4_simd.h |

| emilmont | 10:3bc89ef62ce7 | 3 | * @brief CMSIS Cortex-M4 SIMD Header File |

| emilmont | 10:3bc89ef62ce7 | 4 | * @version V3.01 |

| emilmont | 10:3bc89ef62ce7 | 5 | * @date 06. March 2012 |

| emilmont | 10:3bc89ef62ce7 | 6 | * |

| emilmont | 10:3bc89ef62ce7 | 7 | * @note |

| emilmont | 10:3bc89ef62ce7 | 8 | * Copyright (C) 2010-2012 ARM Limited. All rights reserved. |

| emilmont | 10:3bc89ef62ce7 | 9 | * |

| emilmont | 10:3bc89ef62ce7 | 10 | * @par |

| emilmont | 10:3bc89ef62ce7 | 11 | * ARM Limited (ARM) is supplying this software for use with Cortex-M |

| emilmont | 10:3bc89ef62ce7 | 12 | * processor based microcontrollers. This file can be freely distributed |

| emilmont | 10:3bc89ef62ce7 | 13 | * within development tools that are supporting such ARM based processors. |

| emilmont | 10:3bc89ef62ce7 | 14 | * |

| emilmont | 10:3bc89ef62ce7 | 15 | * @par |

| emilmont | 10:3bc89ef62ce7 | 16 | * THIS SOFTWARE IS PROVIDED "AS IS". NO WARRANTIES, WHETHER EXPRESS, IMPLIED |

| emilmont | 10:3bc89ef62ce7 | 17 | * OR STATUTORY, INCLUDING, BUT NOT LIMITED TO, IMPLIED WARRANTIES OF |

| emilmont | 10:3bc89ef62ce7 | 18 | * MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE APPLY TO THIS SOFTWARE. |

| emilmont | 10:3bc89ef62ce7 | 19 | * ARM SHALL NOT, IN ANY CIRCUMSTANCES, BE LIABLE FOR SPECIAL, INCIDENTAL, OR |

| emilmont | 10:3bc89ef62ce7 | 20 | * CONSEQUENTIAL DAMAGES, FOR ANY REASON WHATSOEVER. |

| emilmont | 10:3bc89ef62ce7 | 21 | * |

| emilmont | 10:3bc89ef62ce7 | 22 | ******************************************************************************/ |

| emilmont | 10:3bc89ef62ce7 | 23 | |

| emilmont | 10:3bc89ef62ce7 | 24 | #ifdef __cplusplus |

| emilmont | 10:3bc89ef62ce7 | 25 | extern "C" { |

| emilmont | 10:3bc89ef62ce7 | 26 | #endif |

| emilmont | 10:3bc89ef62ce7 | 27 | |

| emilmont | 10:3bc89ef62ce7 | 28 | #ifndef __CORE_CM4_SIMD_H |

| emilmont | 10:3bc89ef62ce7 | 29 | #define __CORE_CM4_SIMD_H |

| emilmont | 10:3bc89ef62ce7 | 30 | |

| emilmont | 10:3bc89ef62ce7 | 31 | |

| emilmont | 10:3bc89ef62ce7 | 32 | /******************************************************************************* |

| emilmont | 10:3bc89ef62ce7 | 33 | * Hardware Abstraction Layer |

| emilmont | 10:3bc89ef62ce7 | 34 | ******************************************************************************/ |

| emilmont | 10:3bc89ef62ce7 | 35 | |

| emilmont | 10:3bc89ef62ce7 | 36 | |

| emilmont | 10:3bc89ef62ce7 | 37 | /* ################### Compiler specific Intrinsics ########################### */ |

| emilmont | 10:3bc89ef62ce7 | 38 | /** \defgroup CMSIS_SIMD_intrinsics CMSIS SIMD Intrinsics |

| emilmont | 10:3bc89ef62ce7 | 39 | Access to dedicated SIMD instructions |

| emilmont | 10:3bc89ef62ce7 | 40 | @{ |

| emilmont | 10:3bc89ef62ce7 | 41 | */ |

| emilmont | 10:3bc89ef62ce7 | 42 | |

| emilmont | 10:3bc89ef62ce7 | 43 | #if defined ( __CC_ARM ) /*------------------RealView Compiler -----------------*/ |

| emilmont | 10:3bc89ef62ce7 | 44 | /* ARM armcc specific functions */ |

| emilmont | 10:3bc89ef62ce7 | 45 | |

| emilmont | 10:3bc89ef62ce7 | 46 | /*------ CM4 SIMD Intrinsics -----------------------------------------------------*/ |

| emilmont | 10:3bc89ef62ce7 | 47 | #define __SADD8 __sadd8 |

| emilmont | 10:3bc89ef62ce7 | 48 | #define __QADD8 __qadd8 |

| emilmont | 10:3bc89ef62ce7 | 49 | #define __SHADD8 __shadd8 |

| emilmont | 10:3bc89ef62ce7 | 50 | #define __UADD8 __uadd8 |

| emilmont | 10:3bc89ef62ce7 | 51 | #define __UQADD8 __uqadd8 |

| emilmont | 10:3bc89ef62ce7 | 52 | #define __UHADD8 __uhadd8 |

| emilmont | 10:3bc89ef62ce7 | 53 | #define __SSUB8 __ssub8 |

| emilmont | 10:3bc89ef62ce7 | 54 | #define __QSUB8 __qsub8 |

| emilmont | 10:3bc89ef62ce7 | 55 | #define __SHSUB8 __shsub8 |

| emilmont | 10:3bc89ef62ce7 | 56 | #define __USUB8 __usub8 |

| emilmont | 10:3bc89ef62ce7 | 57 | #define __UQSUB8 __uqsub8 |

| emilmont | 10:3bc89ef62ce7 | 58 | #define __UHSUB8 __uhsub8 |

| emilmont | 10:3bc89ef62ce7 | 59 | #define __SADD16 __sadd16 |

| emilmont | 10:3bc89ef62ce7 | 60 | #define __QADD16 __qadd16 |

| emilmont | 10:3bc89ef62ce7 | 61 | #define __SHADD16 __shadd16 |

| emilmont | 10:3bc89ef62ce7 | 62 | #define __UADD16 __uadd16 |

| emilmont | 10:3bc89ef62ce7 | 63 | #define __UQADD16 __uqadd16 |

| emilmont | 10:3bc89ef62ce7 | 64 | #define __UHADD16 __uhadd16 |

| emilmont | 10:3bc89ef62ce7 | 65 | #define __SSUB16 __ssub16 |

| emilmont | 10:3bc89ef62ce7 | 66 | #define __QSUB16 __qsub16 |

| emilmont | 10:3bc89ef62ce7 | 67 | #define __SHSUB16 __shsub16 |

| emilmont | 10:3bc89ef62ce7 | 68 | #define __USUB16 __usub16 |

| emilmont | 10:3bc89ef62ce7 | 69 | #define __UQSUB16 __uqsub16 |

| emilmont | 10:3bc89ef62ce7 | 70 | #define __UHSUB16 __uhsub16 |

| emilmont | 10:3bc89ef62ce7 | 71 | #define __SASX __sasx |

| emilmont | 10:3bc89ef62ce7 | 72 | #define __QASX __qasx |

| emilmont | 10:3bc89ef62ce7 | 73 | #define __SHASX __shasx |

| emilmont | 10:3bc89ef62ce7 | 74 | #define __UASX __uasx |

| emilmont | 10:3bc89ef62ce7 | 75 | #define __UQASX __uqasx |

| emilmont | 10:3bc89ef62ce7 | 76 | #define __UHASX __uhasx |

| emilmont | 10:3bc89ef62ce7 | 77 | #define __SSAX __ssax |

| emilmont | 10:3bc89ef62ce7 | 78 | #define __QSAX __qsax |

| emilmont | 10:3bc89ef62ce7 | 79 | #define __SHSAX __shsax |

| emilmont | 10:3bc89ef62ce7 | 80 | #define __USAX __usax |

| emilmont | 10:3bc89ef62ce7 | 81 | #define __UQSAX __uqsax |

| emilmont | 10:3bc89ef62ce7 | 82 | #define __UHSAX __uhsax |

| emilmont | 10:3bc89ef62ce7 | 83 | #define __USAD8 __usad8 |

| emilmont | 10:3bc89ef62ce7 | 84 | #define __USADA8 __usada8 |

| emilmont | 10:3bc89ef62ce7 | 85 | #define __SSAT16 __ssat16 |

| emilmont | 10:3bc89ef62ce7 | 86 | #define __USAT16 __usat16 |

| emilmont | 10:3bc89ef62ce7 | 87 | #define __UXTB16 __uxtb16 |

| emilmont | 10:3bc89ef62ce7 | 88 | #define __UXTAB16 __uxtab16 |

| emilmont | 10:3bc89ef62ce7 | 89 | #define __SXTB16 __sxtb16 |

| emilmont | 10:3bc89ef62ce7 | 90 | #define __SXTAB16 __sxtab16 |

| emilmont | 10:3bc89ef62ce7 | 91 | #define __SMUAD __smuad |

| emilmont | 10:3bc89ef62ce7 | 92 | #define __SMUADX __smuadx |

| emilmont | 10:3bc89ef62ce7 | 93 | #define __SMLAD __smlad |

| emilmont | 10:3bc89ef62ce7 | 94 | #define __SMLADX __smladx |

| emilmont | 10:3bc89ef62ce7 | 95 | #define __SMLALD __smlald |

| emilmont | 10:3bc89ef62ce7 | 96 | #define __SMLALDX __smlaldx |

| emilmont | 10:3bc89ef62ce7 | 97 | #define __SMUSD __smusd |

| emilmont | 10:3bc89ef62ce7 | 98 | #define __SMUSDX __smusdx |

| emilmont | 10:3bc89ef62ce7 | 99 | #define __SMLSD __smlsd |

| emilmont | 10:3bc89ef62ce7 | 100 | #define __SMLSDX __smlsdx |

| emilmont | 10:3bc89ef62ce7 | 101 | #define __SMLSLD __smlsld |

| emilmont | 10:3bc89ef62ce7 | 102 | #define __SMLSLDX __smlsldx |

| emilmont | 10:3bc89ef62ce7 | 103 | #define __SEL __sel |

| emilmont | 10:3bc89ef62ce7 | 104 | #define __QADD __qadd |

| emilmont | 10:3bc89ef62ce7 | 105 | #define __QSUB __qsub |

| emilmont | 10:3bc89ef62ce7 | 106 | |

| emilmont | 10:3bc89ef62ce7 | 107 | #define __PKHBT(ARG1,ARG2,ARG3) ( ((((uint32_t)(ARG1)) ) & 0x0000FFFFUL) | \ |

| emilmont | 10:3bc89ef62ce7 | 108 | ((((uint32_t)(ARG2)) << (ARG3)) & 0xFFFF0000UL) ) |

| emilmont | 10:3bc89ef62ce7 | 109 | |

| emilmont | 10:3bc89ef62ce7 | 110 | #define __PKHTB(ARG1,ARG2,ARG3) ( ((((uint32_t)(ARG1)) ) & 0xFFFF0000UL) | \ |

| emilmont | 10:3bc89ef62ce7 | 111 | ((((uint32_t)(ARG2)) >> (ARG3)) & 0x0000FFFFUL) ) |

| emilmont | 10:3bc89ef62ce7 | 112 | |

| emilmont | 10:3bc89ef62ce7 | 113 | |

| emilmont | 10:3bc89ef62ce7 | 114 | /*-- End CM4 SIMD Intrinsics -----------------------------------------------------*/ |

| emilmont | 10:3bc89ef62ce7 | 115 | |

| emilmont | 10:3bc89ef62ce7 | 116 | |

| emilmont | 10:3bc89ef62ce7 | 117 | |

| emilmont | 10:3bc89ef62ce7 | 118 | #elif defined ( __ICCARM__ ) /*------------------ ICC Compiler -------------------*/ |

| emilmont | 10:3bc89ef62ce7 | 119 | /* IAR iccarm specific functions */ |

| emilmont | 10:3bc89ef62ce7 | 120 | |

| emilmont | 10:3bc89ef62ce7 | 121 | /*------ CM4 SIMD Intrinsics -----------------------------------------------------*/ |

| emilmont | 10:3bc89ef62ce7 | 122 | #include <cmsis_iar.h> |

| emilmont | 10:3bc89ef62ce7 | 123 | |

| emilmont | 10:3bc89ef62ce7 | 124 | /*-- End CM4 SIMD Intrinsics -----------------------------------------------------*/ |

| emilmont | 10:3bc89ef62ce7 | 125 | |

| emilmont | 10:3bc89ef62ce7 | 126 | |

| emilmont | 10:3bc89ef62ce7 | 127 | |

| emilmont | 10:3bc89ef62ce7 | 128 | #elif defined ( __TMS470__ ) /*---------------- TI CCS Compiler ------------------*/ |

| emilmont | 10:3bc89ef62ce7 | 129 | /* TI CCS specific functions */ |

| emilmont | 10:3bc89ef62ce7 | 130 | |

| emilmont | 10:3bc89ef62ce7 | 131 | /*------ CM4 SIMD Intrinsics -----------------------------------------------------*/ |

| emilmont | 10:3bc89ef62ce7 | 132 | #include <cmsis_ccs.h> |

| emilmont | 10:3bc89ef62ce7 | 133 | |

| emilmont | 10:3bc89ef62ce7 | 134 | /*-- End CM4 SIMD Intrinsics -----------------------------------------------------*/ |

| emilmont | 10:3bc89ef62ce7 | 135 | |

| emilmont | 10:3bc89ef62ce7 | 136 | |

| emilmont | 10:3bc89ef62ce7 | 137 | |

| emilmont | 10:3bc89ef62ce7 | 138 | #elif defined ( __GNUC__ ) /*------------------ GNU Compiler ---------------------*/ |

| emilmont | 10:3bc89ef62ce7 | 139 | /* GNU gcc specific functions */ |

| emilmont | 10:3bc89ef62ce7 | 140 | |

| emilmont | 10:3bc89ef62ce7 | 141 | /*------ CM4 SIMD Intrinsics -----------------------------------------------------*/ |

| emilmont | 10:3bc89ef62ce7 | 142 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SADD8(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 143 | { |

| emilmont | 10:3bc89ef62ce7 | 144 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 145 | |

| emilmont | 10:3bc89ef62ce7 | 146 | __ASM volatile ("sadd8 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 147 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 148 | } |

| emilmont | 10:3bc89ef62ce7 | 149 | |

| emilmont | 10:3bc89ef62ce7 | 150 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __QADD8(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 151 | { |

| emilmont | 10:3bc89ef62ce7 | 152 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 153 | |

| emilmont | 10:3bc89ef62ce7 | 154 | __ASM volatile ("qadd8 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 155 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 156 | } |

| emilmont | 10:3bc89ef62ce7 | 157 | |

| emilmont | 10:3bc89ef62ce7 | 158 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SHADD8(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 159 | { |

| emilmont | 10:3bc89ef62ce7 | 160 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 161 | |

| emilmont | 10:3bc89ef62ce7 | 162 | __ASM volatile ("shadd8 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 163 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 164 | } |

| emilmont | 10:3bc89ef62ce7 | 165 | |

| emilmont | 10:3bc89ef62ce7 | 166 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UADD8(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 167 | { |

| emilmont | 10:3bc89ef62ce7 | 168 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 169 | |

| emilmont | 10:3bc89ef62ce7 | 170 | __ASM volatile ("uadd8 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 171 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 172 | } |

| emilmont | 10:3bc89ef62ce7 | 173 | |

| emilmont | 10:3bc89ef62ce7 | 174 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UQADD8(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 175 | { |

| emilmont | 10:3bc89ef62ce7 | 176 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 177 | |

| emilmont | 10:3bc89ef62ce7 | 178 | __ASM volatile ("uqadd8 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 179 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 180 | } |

| emilmont | 10:3bc89ef62ce7 | 181 | |

| emilmont | 10:3bc89ef62ce7 | 182 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UHADD8(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 183 | { |

| emilmont | 10:3bc89ef62ce7 | 184 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 185 | |

| emilmont | 10:3bc89ef62ce7 | 186 | __ASM volatile ("uhadd8 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 187 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 188 | } |

| emilmont | 10:3bc89ef62ce7 | 189 | |

| emilmont | 10:3bc89ef62ce7 | 190 | |

| emilmont | 10:3bc89ef62ce7 | 191 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SSUB8(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 192 | { |

| emilmont | 10:3bc89ef62ce7 | 193 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 194 | |

| emilmont | 10:3bc89ef62ce7 | 195 | __ASM volatile ("ssub8 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 196 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 197 | } |

| emilmont | 10:3bc89ef62ce7 | 198 | |

| emilmont | 10:3bc89ef62ce7 | 199 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __QSUB8(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 200 | { |

| emilmont | 10:3bc89ef62ce7 | 201 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 202 | |

| emilmont | 10:3bc89ef62ce7 | 203 | __ASM volatile ("qsub8 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 204 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 205 | } |

| emilmont | 10:3bc89ef62ce7 | 206 | |

| emilmont | 10:3bc89ef62ce7 | 207 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SHSUB8(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 208 | { |

| emilmont | 10:3bc89ef62ce7 | 209 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 210 | |

| emilmont | 10:3bc89ef62ce7 | 211 | __ASM volatile ("shsub8 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 212 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 213 | } |

| emilmont | 10:3bc89ef62ce7 | 214 | |

| emilmont | 10:3bc89ef62ce7 | 215 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __USUB8(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 216 | { |

| emilmont | 10:3bc89ef62ce7 | 217 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 218 | |

| emilmont | 10:3bc89ef62ce7 | 219 | __ASM volatile ("usub8 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 220 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 221 | } |

| emilmont | 10:3bc89ef62ce7 | 222 | |

| emilmont | 10:3bc89ef62ce7 | 223 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UQSUB8(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 224 | { |

| emilmont | 10:3bc89ef62ce7 | 225 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 226 | |

| emilmont | 10:3bc89ef62ce7 | 227 | __ASM volatile ("uqsub8 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 228 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 229 | } |

| emilmont | 10:3bc89ef62ce7 | 230 | |

| emilmont | 10:3bc89ef62ce7 | 231 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UHSUB8(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 232 | { |

| emilmont | 10:3bc89ef62ce7 | 233 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 234 | |

| emilmont | 10:3bc89ef62ce7 | 235 | __ASM volatile ("uhsub8 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 236 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 237 | } |

| emilmont | 10:3bc89ef62ce7 | 238 | |

| emilmont | 10:3bc89ef62ce7 | 239 | |

| emilmont | 10:3bc89ef62ce7 | 240 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SADD16(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 241 | { |

| emilmont | 10:3bc89ef62ce7 | 242 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 243 | |

| emilmont | 10:3bc89ef62ce7 | 244 | __ASM volatile ("sadd16 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 245 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 246 | } |

| emilmont | 10:3bc89ef62ce7 | 247 | |

| emilmont | 10:3bc89ef62ce7 | 248 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __QADD16(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 249 | { |

| emilmont | 10:3bc89ef62ce7 | 250 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 251 | |

| emilmont | 10:3bc89ef62ce7 | 252 | __ASM volatile ("qadd16 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 253 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 254 | } |

| emilmont | 10:3bc89ef62ce7 | 255 | |

| emilmont | 10:3bc89ef62ce7 | 256 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SHADD16(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 257 | { |

| emilmont | 10:3bc89ef62ce7 | 258 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 259 | |

| emilmont | 10:3bc89ef62ce7 | 260 | __ASM volatile ("shadd16 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 261 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 262 | } |

| emilmont | 10:3bc89ef62ce7 | 263 | |

| emilmont | 10:3bc89ef62ce7 | 264 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UADD16(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 265 | { |

| emilmont | 10:3bc89ef62ce7 | 266 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 267 | |

| emilmont | 10:3bc89ef62ce7 | 268 | __ASM volatile ("uadd16 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 269 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 270 | } |

| emilmont | 10:3bc89ef62ce7 | 271 | |

| emilmont | 10:3bc89ef62ce7 | 272 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UQADD16(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 273 | { |

| emilmont | 10:3bc89ef62ce7 | 274 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 275 | |

| emilmont | 10:3bc89ef62ce7 | 276 | __ASM volatile ("uqadd16 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 277 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 278 | } |

| emilmont | 10:3bc89ef62ce7 | 279 | |

| emilmont | 10:3bc89ef62ce7 | 280 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UHADD16(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 281 | { |

| emilmont | 10:3bc89ef62ce7 | 282 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 283 | |

| emilmont | 10:3bc89ef62ce7 | 284 | __ASM volatile ("uhadd16 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 285 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 286 | } |

| emilmont | 10:3bc89ef62ce7 | 287 | |

| emilmont | 10:3bc89ef62ce7 | 288 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SSUB16(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 289 | { |

| emilmont | 10:3bc89ef62ce7 | 290 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 291 | |

| emilmont | 10:3bc89ef62ce7 | 292 | __ASM volatile ("ssub16 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 293 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 294 | } |

| emilmont | 10:3bc89ef62ce7 | 295 | |

| emilmont | 10:3bc89ef62ce7 | 296 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __QSUB16(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 297 | { |

| emilmont | 10:3bc89ef62ce7 | 298 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 299 | |

| emilmont | 10:3bc89ef62ce7 | 300 | __ASM volatile ("qsub16 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 301 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 302 | } |

| emilmont | 10:3bc89ef62ce7 | 303 | |

| emilmont | 10:3bc89ef62ce7 | 304 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SHSUB16(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 305 | { |

| emilmont | 10:3bc89ef62ce7 | 306 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 307 | |

| emilmont | 10:3bc89ef62ce7 | 308 | __ASM volatile ("shsub16 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 309 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 310 | } |

| emilmont | 10:3bc89ef62ce7 | 311 | |

| emilmont | 10:3bc89ef62ce7 | 312 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __USUB16(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 313 | { |

| emilmont | 10:3bc89ef62ce7 | 314 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 315 | |

| emilmont | 10:3bc89ef62ce7 | 316 | __ASM volatile ("usub16 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 317 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 318 | } |

| emilmont | 10:3bc89ef62ce7 | 319 | |

| emilmont | 10:3bc89ef62ce7 | 320 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UQSUB16(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 321 | { |

| emilmont | 10:3bc89ef62ce7 | 322 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 323 | |

| emilmont | 10:3bc89ef62ce7 | 324 | __ASM volatile ("uqsub16 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 325 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 326 | } |

| emilmont | 10:3bc89ef62ce7 | 327 | |

| emilmont | 10:3bc89ef62ce7 | 328 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UHSUB16(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 329 | { |

| emilmont | 10:3bc89ef62ce7 | 330 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 331 | |

| emilmont | 10:3bc89ef62ce7 | 332 | __ASM volatile ("uhsub16 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 333 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 334 | } |

| emilmont | 10:3bc89ef62ce7 | 335 | |

| emilmont | 10:3bc89ef62ce7 | 336 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SASX(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 337 | { |

| emilmont | 10:3bc89ef62ce7 | 338 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 339 | |

| emilmont | 10:3bc89ef62ce7 | 340 | __ASM volatile ("sasx %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 341 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 342 | } |

| emilmont | 10:3bc89ef62ce7 | 343 | |

| emilmont | 10:3bc89ef62ce7 | 344 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __QASX(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 345 | { |

| emilmont | 10:3bc89ef62ce7 | 346 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 347 | |

| emilmont | 10:3bc89ef62ce7 | 348 | __ASM volatile ("qasx %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 349 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 350 | } |

| emilmont | 10:3bc89ef62ce7 | 351 | |

| emilmont | 10:3bc89ef62ce7 | 352 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SHASX(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 353 | { |

| emilmont | 10:3bc89ef62ce7 | 354 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 355 | |

| emilmont | 10:3bc89ef62ce7 | 356 | __ASM volatile ("shasx %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 357 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 358 | } |

| emilmont | 10:3bc89ef62ce7 | 359 | |

| emilmont | 10:3bc89ef62ce7 | 360 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UASX(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 361 | { |

| emilmont | 10:3bc89ef62ce7 | 362 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 363 | |

| emilmont | 10:3bc89ef62ce7 | 364 | __ASM volatile ("uasx %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 365 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 366 | } |

| emilmont | 10:3bc89ef62ce7 | 367 | |

| emilmont | 10:3bc89ef62ce7 | 368 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UQASX(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 369 | { |

| emilmont | 10:3bc89ef62ce7 | 370 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 371 | |

| emilmont | 10:3bc89ef62ce7 | 372 | __ASM volatile ("uqasx %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 373 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 374 | } |

| emilmont | 10:3bc89ef62ce7 | 375 | |

| emilmont | 10:3bc89ef62ce7 | 376 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UHASX(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 377 | { |

| emilmont | 10:3bc89ef62ce7 | 378 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 379 | |

| emilmont | 10:3bc89ef62ce7 | 380 | __ASM volatile ("uhasx %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 381 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 382 | } |

| emilmont | 10:3bc89ef62ce7 | 383 | |

| emilmont | 10:3bc89ef62ce7 | 384 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SSAX(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 385 | { |

| emilmont | 10:3bc89ef62ce7 | 386 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 387 | |

| emilmont | 10:3bc89ef62ce7 | 388 | __ASM volatile ("ssax %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 389 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 390 | } |

| emilmont | 10:3bc89ef62ce7 | 391 | |

| emilmont | 10:3bc89ef62ce7 | 392 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __QSAX(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 393 | { |

| emilmont | 10:3bc89ef62ce7 | 394 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 395 | |

| emilmont | 10:3bc89ef62ce7 | 396 | __ASM volatile ("qsax %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 397 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 398 | } |

| emilmont | 10:3bc89ef62ce7 | 399 | |

| emilmont | 10:3bc89ef62ce7 | 400 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SHSAX(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 401 | { |

| emilmont | 10:3bc89ef62ce7 | 402 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 403 | |

| emilmont | 10:3bc89ef62ce7 | 404 | __ASM volatile ("shsax %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 405 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 406 | } |

| emilmont | 10:3bc89ef62ce7 | 407 | |

| emilmont | 10:3bc89ef62ce7 | 408 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __USAX(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 409 | { |

| emilmont | 10:3bc89ef62ce7 | 410 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 411 | |

| emilmont | 10:3bc89ef62ce7 | 412 | __ASM volatile ("usax %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 413 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 414 | } |

| emilmont | 10:3bc89ef62ce7 | 415 | |

| emilmont | 10:3bc89ef62ce7 | 416 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UQSAX(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 417 | { |

| emilmont | 10:3bc89ef62ce7 | 418 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 419 | |

| emilmont | 10:3bc89ef62ce7 | 420 | __ASM volatile ("uqsax %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 421 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 422 | } |

| emilmont | 10:3bc89ef62ce7 | 423 | |

| emilmont | 10:3bc89ef62ce7 | 424 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UHSAX(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 425 | { |

| emilmont | 10:3bc89ef62ce7 | 426 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 427 | |

| emilmont | 10:3bc89ef62ce7 | 428 | __ASM volatile ("uhsax %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 429 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 430 | } |

| emilmont | 10:3bc89ef62ce7 | 431 | |

| emilmont | 10:3bc89ef62ce7 | 432 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __USAD8(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 433 | { |

| emilmont | 10:3bc89ef62ce7 | 434 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 435 | |

| emilmont | 10:3bc89ef62ce7 | 436 | __ASM volatile ("usad8 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 437 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 438 | } |

| emilmont | 10:3bc89ef62ce7 | 439 | |

| emilmont | 10:3bc89ef62ce7 | 440 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __USADA8(uint32_t op1, uint32_t op2, uint32_t op3) |

| emilmont | 10:3bc89ef62ce7 | 441 | { |

| emilmont | 10:3bc89ef62ce7 | 442 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 443 | |

| emilmont | 10:3bc89ef62ce7 | 444 | __ASM volatile ("usada8 %0, %1, %2, %3" : "=r" (result) : "r" (op1), "r" (op2), "r" (op3) ); |

| emilmont | 10:3bc89ef62ce7 | 445 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 446 | } |

| emilmont | 10:3bc89ef62ce7 | 447 | |

| emilmont | 10:3bc89ef62ce7 | 448 | #define __SSAT16(ARG1,ARG2) \ |

| emilmont | 10:3bc89ef62ce7 | 449 | ({ \ |

| emilmont | 10:3bc89ef62ce7 | 450 | uint32_t __RES, __ARG1 = (ARG1); \ |

| emilmont | 10:3bc89ef62ce7 | 451 | __ASM ("ssat16 %0, %1, %2" : "=r" (__RES) : "I" (ARG2), "r" (__ARG1) ); \ |

| emilmont | 10:3bc89ef62ce7 | 452 | __RES; \ |

| emilmont | 10:3bc89ef62ce7 | 453 | }) |

| emilmont | 10:3bc89ef62ce7 | 454 | |

| emilmont | 10:3bc89ef62ce7 | 455 | #define __USAT16(ARG1,ARG2) \ |

| emilmont | 10:3bc89ef62ce7 | 456 | ({ \ |

| emilmont | 10:3bc89ef62ce7 | 457 | uint32_t __RES, __ARG1 = (ARG1); \ |

| emilmont | 10:3bc89ef62ce7 | 458 | __ASM ("usat16 %0, %1, %2" : "=r" (__RES) : "I" (ARG2), "r" (__ARG1) ); \ |

| emilmont | 10:3bc89ef62ce7 | 459 | __RES; \ |

| emilmont | 10:3bc89ef62ce7 | 460 | }) |

| emilmont | 10:3bc89ef62ce7 | 461 | |

| emilmont | 10:3bc89ef62ce7 | 462 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UXTB16(uint32_t op1) |

| emilmont | 10:3bc89ef62ce7 | 463 | { |

| emilmont | 10:3bc89ef62ce7 | 464 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 465 | |

| emilmont | 10:3bc89ef62ce7 | 466 | __ASM volatile ("uxtb16 %0, %1" : "=r" (result) : "r" (op1)); |

| emilmont | 10:3bc89ef62ce7 | 467 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 468 | } |

| emilmont | 10:3bc89ef62ce7 | 469 | |

| emilmont | 10:3bc89ef62ce7 | 470 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __UXTAB16(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 471 | { |

| emilmont | 10:3bc89ef62ce7 | 472 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 473 | |

| emilmont | 10:3bc89ef62ce7 | 474 | __ASM volatile ("uxtab16 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 475 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 476 | } |

| emilmont | 10:3bc89ef62ce7 | 477 | |

| emilmont | 10:3bc89ef62ce7 | 478 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SXTB16(uint32_t op1) |

| emilmont | 10:3bc89ef62ce7 | 479 | { |

| emilmont | 10:3bc89ef62ce7 | 480 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 481 | |

| emilmont | 10:3bc89ef62ce7 | 482 | __ASM volatile ("sxtb16 %0, %1" : "=r" (result) : "r" (op1)); |

| emilmont | 10:3bc89ef62ce7 | 483 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 484 | } |

| emilmont | 10:3bc89ef62ce7 | 485 | |

| emilmont | 10:3bc89ef62ce7 | 486 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SXTAB16(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 487 | { |

| emilmont | 10:3bc89ef62ce7 | 488 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 489 | |

| emilmont | 10:3bc89ef62ce7 | 490 | __ASM volatile ("sxtab16 %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 491 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 492 | } |

| emilmont | 10:3bc89ef62ce7 | 493 | |

| emilmont | 10:3bc89ef62ce7 | 494 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SMUAD (uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 495 | { |

| emilmont | 10:3bc89ef62ce7 | 496 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 497 | |

| emilmont | 10:3bc89ef62ce7 | 498 | __ASM volatile ("smuad %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 499 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 500 | } |

| emilmont | 10:3bc89ef62ce7 | 501 | |

| emilmont | 10:3bc89ef62ce7 | 502 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SMUADX (uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 503 | { |

| emilmont | 10:3bc89ef62ce7 | 504 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 505 | |

| emilmont | 10:3bc89ef62ce7 | 506 | __ASM volatile ("smuadx %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 507 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 508 | } |

| emilmont | 10:3bc89ef62ce7 | 509 | |

| emilmont | 10:3bc89ef62ce7 | 510 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SMLAD (uint32_t op1, uint32_t op2, uint32_t op3) |

| emilmont | 10:3bc89ef62ce7 | 511 | { |

| emilmont | 10:3bc89ef62ce7 | 512 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 513 | |

| emilmont | 10:3bc89ef62ce7 | 514 | __ASM volatile ("smlad %0, %1, %2, %3" : "=r" (result) : "r" (op1), "r" (op2), "r" (op3) ); |

| emilmont | 10:3bc89ef62ce7 | 515 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 516 | } |

| emilmont | 10:3bc89ef62ce7 | 517 | |

| emilmont | 10:3bc89ef62ce7 | 518 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SMLADX (uint32_t op1, uint32_t op2, uint32_t op3) |

| emilmont | 10:3bc89ef62ce7 | 519 | { |

| emilmont | 10:3bc89ef62ce7 | 520 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 521 | |

| emilmont | 10:3bc89ef62ce7 | 522 | __ASM volatile ("smladx %0, %1, %2, %3" : "=r" (result) : "r" (op1), "r" (op2), "r" (op3) ); |

| emilmont | 10:3bc89ef62ce7 | 523 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 524 | } |

| emilmont | 10:3bc89ef62ce7 | 525 | |

| emilmont | 10:3bc89ef62ce7 | 526 | #define __SMLALD(ARG1,ARG2,ARG3) \ |

| emilmont | 10:3bc89ef62ce7 | 527 | ({ \ |

| emilmont | 10:3bc89ef62ce7 | 528 | uint32_t __ARG1 = (ARG1), __ARG2 = (ARG2), __ARG3_H = (uint32_t)((uint64_t)(ARG3) >> 32), __ARG3_L = (uint32_t)((uint64_t)(ARG3) & 0xFFFFFFFFUL); \ |

| emilmont | 10:3bc89ef62ce7 | 529 | __ASM volatile ("smlald %0, %1, %2, %3" : "=r" (__ARG3_L), "=r" (__ARG3_H) : "r" (__ARG1), "r" (__ARG2), "0" (__ARG3_L), "1" (__ARG3_H) ); \ |

| emilmont | 10:3bc89ef62ce7 | 530 | (uint64_t)(((uint64_t)__ARG3_H << 32) | __ARG3_L); \ |

| emilmont | 10:3bc89ef62ce7 | 531 | }) |

| emilmont | 10:3bc89ef62ce7 | 532 | |

| emilmont | 10:3bc89ef62ce7 | 533 | #define __SMLALDX(ARG1,ARG2,ARG3) \ |

| emilmont | 10:3bc89ef62ce7 | 534 | ({ \ |

| emilmont | 10:3bc89ef62ce7 | 535 | uint32_t __ARG1 = (ARG1), __ARG2 = (ARG2), __ARG3_H = (uint32_t)((uint64_t)(ARG3) >> 32), __ARG3_L = (uint32_t)((uint64_t)(ARG3) & 0xFFFFFFFFUL); \ |

| emilmont | 10:3bc89ef62ce7 | 536 | __ASM volatile ("smlaldx %0, %1, %2, %3" : "=r" (__ARG3_L), "=r" (__ARG3_H) : "r" (__ARG1), "r" (__ARG2), "0" (__ARG3_L), "1" (__ARG3_H) ); \ |

| emilmont | 10:3bc89ef62ce7 | 537 | (uint64_t)(((uint64_t)__ARG3_H << 32) | __ARG3_L); \ |

| emilmont | 10:3bc89ef62ce7 | 538 | }) |

| emilmont | 10:3bc89ef62ce7 | 539 | |

| emilmont | 10:3bc89ef62ce7 | 540 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SMUSD (uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 541 | { |

| emilmont | 10:3bc89ef62ce7 | 542 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 543 | |

| emilmont | 10:3bc89ef62ce7 | 544 | __ASM volatile ("smusd %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 545 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 546 | } |

| emilmont | 10:3bc89ef62ce7 | 547 | |

| emilmont | 10:3bc89ef62ce7 | 548 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SMUSDX (uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 549 | { |

| emilmont | 10:3bc89ef62ce7 | 550 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 551 | |

| emilmont | 10:3bc89ef62ce7 | 552 | __ASM volatile ("smusdx %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 553 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 554 | } |

| emilmont | 10:3bc89ef62ce7 | 555 | |

| emilmont | 10:3bc89ef62ce7 | 556 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SMLSD (uint32_t op1, uint32_t op2, uint32_t op3) |

| emilmont | 10:3bc89ef62ce7 | 557 | { |

| emilmont | 10:3bc89ef62ce7 | 558 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 559 | |

| emilmont | 10:3bc89ef62ce7 | 560 | __ASM volatile ("smlsd %0, %1, %2, %3" : "=r" (result) : "r" (op1), "r" (op2), "r" (op3) ); |

| emilmont | 10:3bc89ef62ce7 | 561 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 562 | } |

| emilmont | 10:3bc89ef62ce7 | 563 | |

| emilmont | 10:3bc89ef62ce7 | 564 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SMLSDX (uint32_t op1, uint32_t op2, uint32_t op3) |

| emilmont | 10:3bc89ef62ce7 | 565 | { |

| emilmont | 10:3bc89ef62ce7 | 566 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 567 | |

| emilmont | 10:3bc89ef62ce7 | 568 | __ASM volatile ("smlsdx %0, %1, %2, %3" : "=r" (result) : "r" (op1), "r" (op2), "r" (op3) ); |

| emilmont | 10:3bc89ef62ce7 | 569 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 570 | } |

| emilmont | 10:3bc89ef62ce7 | 571 | |

| emilmont | 10:3bc89ef62ce7 | 572 | #define __SMLSLD(ARG1,ARG2,ARG3) \ |

| emilmont | 10:3bc89ef62ce7 | 573 | ({ \ |

| emilmont | 10:3bc89ef62ce7 | 574 | uint32_t __ARG1 = (ARG1), __ARG2 = (ARG2), __ARG3_H = (uint32_t)((uint64_t)(ARG3) >> 32), __ARG3_L = (uint32_t)((uint64_t)(ARG3) & 0xFFFFFFFFUL); \ |

| emilmont | 10:3bc89ef62ce7 | 575 | __ASM volatile ("smlsld %0, %1, %2, %3" : "=r" (__ARG3_L), "=r" (__ARG3_H) : "r" (__ARG1), "r" (__ARG2), "0" (__ARG3_L), "1" (__ARG3_H) ); \ |

| emilmont | 10:3bc89ef62ce7 | 576 | (uint64_t)(((uint64_t)__ARG3_H << 32) | __ARG3_L); \ |

| emilmont | 10:3bc89ef62ce7 | 577 | }) |

| emilmont | 10:3bc89ef62ce7 | 578 | |

| emilmont | 10:3bc89ef62ce7 | 579 | #define __SMLSLDX(ARG1,ARG2,ARG3) \ |

| emilmont | 10:3bc89ef62ce7 | 580 | ({ \ |

| emilmont | 10:3bc89ef62ce7 | 581 | uint32_t __ARG1 = (ARG1), __ARG2 = (ARG2), __ARG3_H = (uint32_t)((uint64_t)(ARG3) >> 32), __ARG3_L = (uint32_t)((uint64_t)(ARG3) & 0xFFFFFFFFUL); \ |

| emilmont | 10:3bc89ef62ce7 | 582 | __ASM volatile ("smlsldx %0, %1, %2, %3" : "=r" (__ARG3_L), "=r" (__ARG3_H) : "r" (__ARG1), "r" (__ARG2), "0" (__ARG3_L), "1" (__ARG3_H) ); \ |

| emilmont | 10:3bc89ef62ce7 | 583 | (uint64_t)(((uint64_t)__ARG3_H << 32) | __ARG3_L); \ |

| emilmont | 10:3bc89ef62ce7 | 584 | }) |

| emilmont | 10:3bc89ef62ce7 | 585 | |

| emilmont | 10:3bc89ef62ce7 | 586 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __SEL (uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 587 | { |

| emilmont | 10:3bc89ef62ce7 | 588 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 589 | |

| emilmont | 10:3bc89ef62ce7 | 590 | __ASM volatile ("sel %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 591 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 592 | } |

| emilmont | 10:3bc89ef62ce7 | 593 | |

| emilmont | 10:3bc89ef62ce7 | 594 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __QADD(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 595 | { |

| emilmont | 10:3bc89ef62ce7 | 596 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 597 | |

| emilmont | 10:3bc89ef62ce7 | 598 | __ASM volatile ("qadd %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 599 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 600 | } |

| emilmont | 10:3bc89ef62ce7 | 601 | |

| emilmont | 10:3bc89ef62ce7 | 602 | __attribute__( ( always_inline ) ) __STATIC_INLINE uint32_t __QSUB(uint32_t op1, uint32_t op2) |

| emilmont | 10:3bc89ef62ce7 | 603 | { |

| emilmont | 10:3bc89ef62ce7 | 604 | uint32_t result; |

| emilmont | 10:3bc89ef62ce7 | 605 | |

| emilmont | 10:3bc89ef62ce7 | 606 | __ASM volatile ("qsub %0, %1, %2" : "=r" (result) : "r" (op1), "r" (op2) ); |

| emilmont | 10:3bc89ef62ce7 | 607 | return(result); |

| emilmont | 10:3bc89ef62ce7 | 608 | } |

| emilmont | 10:3bc89ef62ce7 | 609 | |

| emilmont | 10:3bc89ef62ce7 | 610 | #define __PKHBT(ARG1,ARG2,ARG3) \ |

| emilmont | 10:3bc89ef62ce7 | 611 | ({ \ |

| emilmont | 10:3bc89ef62ce7 | 612 | uint32_t __RES, __ARG1 = (ARG1), __ARG2 = (ARG2); \ |

| emilmont | 10:3bc89ef62ce7 | 613 | __ASM ("pkhbt %0, %1, %2, lsl %3" : "=r" (__RES) : "r" (__ARG1), "r" (__ARG2), "I" (ARG3) ); \ |

| emilmont | 10:3bc89ef62ce7 | 614 | __RES; \ |

| emilmont | 10:3bc89ef62ce7 | 615 | }) |

| emilmont | 10:3bc89ef62ce7 | 616 | |

| emilmont | 10:3bc89ef62ce7 | 617 | #define __PKHTB(ARG1,ARG2,ARG3) \ |

| emilmont | 10:3bc89ef62ce7 | 618 | ({ \ |

| emilmont | 10:3bc89ef62ce7 | 619 | uint32_t __RES, __ARG1 = (ARG1), __ARG2 = (ARG2); \ |

| emilmont | 10:3bc89ef62ce7 | 620 | if ((ARG3) == 0) \ |

| emilmont | 10:3bc89ef62ce7 | 621 | __ASM ("pkhtb %0, %1, %2" : "=r" (__RES) : "r" (__ARG1), "r" (__ARG2) ); \ |

| emilmont | 10:3bc89ef62ce7 | 622 | else \ |

| emilmont | 10:3bc89ef62ce7 | 623 | __ASM ("pkhtb %0, %1, %2, asr %3" : "=r" (__RES) : "r" (__ARG1), "r" (__ARG2), "I" (ARG3) ); \ |

| emilmont | 10:3bc89ef62ce7 | 624 | __RES; \ |

| emilmont | 10:3bc89ef62ce7 | 625 | }) |

| emilmont | 10:3bc89ef62ce7 | 626 | |

| emilmont | 10:3bc89ef62ce7 | 627 | /*-- End CM4 SIMD Intrinsics -----------------------------------------------------*/ |

| emilmont | 10:3bc89ef62ce7 | 628 | |

| emilmont | 10:3bc89ef62ce7 | 629 | |

| emilmont | 10:3bc89ef62ce7 | 630 | |

| emilmont | 10:3bc89ef62ce7 | 631 | #elif defined ( __TASKING__ ) /*------------------ TASKING Compiler --------------*/ |

| emilmont | 10:3bc89ef62ce7 | 632 | /* TASKING carm specific functions */ |

| emilmont | 10:3bc89ef62ce7 | 633 | |

| emilmont | 10:3bc89ef62ce7 | 634 | |

| emilmont | 10:3bc89ef62ce7 | 635 | /*------ CM4 SIMD Intrinsics -----------------------------------------------------*/ |

| emilmont | 10:3bc89ef62ce7 | 636 | /* not yet supported */ |

| emilmont | 10:3bc89ef62ce7 | 637 | /*-- End CM4 SIMD Intrinsics -----------------------------------------------------*/ |

| emilmont | 10:3bc89ef62ce7 | 638 | |

| emilmont | 10:3bc89ef62ce7 | 639 | |

| emilmont | 10:3bc89ef62ce7 | 640 | #endif |

| emilmont | 10:3bc89ef62ce7 | 641 | |

| emilmont | 10:3bc89ef62ce7 | 642 | /*@} end of group CMSIS_SIMD_intrinsics */ |

| emilmont | 10:3bc89ef62ce7 | 643 | |

| emilmont | 10:3bc89ef62ce7 | 644 | |

| emilmont | 10:3bc89ef62ce7 | 645 | #endif /* __CORE_CM4_SIMD_H */ |

| emilmont | 10:3bc89ef62ce7 | 646 | |

| emilmont | 10:3bc89ef62ce7 | 647 | #ifdef __cplusplus |

| emilmont | 10:3bc89ef62ce7 | 648 | } |

| emilmont | 10:3bc89ef62ce7 | 649 | #endif |
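
The GCC wrappers listed above each emit a single Cortex-M4 DSP instruction, so they cannot be exercised on a development host. As a rough aid for reading them, the following is a minimal sketch of plain-C reference models for a few of the intrinsics (`__USAD8`, `__QADD`, `__SMUAD`, `__SMLALD`, `__PKHBT`). The `ref_` names and helpers are hypothetical and not part of CMSIS; the models follow the documented arithmetic results only and do not model the Q or GE status flags.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical host-side models; names prefixed ref_ are NOT part of CMSIS. */

/* Interpret the low 16 bits of x as a signed halfword. */
static int32_t ref_s16(uint32_t x)
{
    uint32_t h = x & 0xFFFFu;
    return (h & 0x8000u) ? (int32_t)h - 0x10000 : (int32_t)h;
}

/* Interpret all 32 bits of x as a signed word, widened to 64 bits. */
static int64_t ref_s32(uint32_t x)
{
    return (x & 0x80000000u) ? (int64_t)x - 0x100000000LL : (int64_t)x;
}

/* USAD8: sum of absolute differences of the four unsigned byte lanes. */
static uint32_t ref_usad8(uint32_t a, uint32_t b)
{
    uint32_t sum = 0;
    for (int i = 0; i < 4; ++i) {
        uint32_t x = (a >> (8 * i)) & 0xFFu;
        uint32_t y = (b >> (8 * i)) & 0xFFu;
        sum += (x > y) ? (x - y) : (y - x);
    }
    return sum;
}

/* QADD: signed 32-bit add that saturates instead of wrapping. */
static uint32_t ref_qadd(uint32_t a, uint32_t b)
{
    int64_t s = ref_s32(a) + ref_s32(b);
    if (s > INT32_MAX) s = INT32_MAX;
    if (s < INT32_MIN) s = INT32_MIN;
    return (uint32_t)s; /* conversion to unsigned is modulo 2^32 */
}

/* SMUAD: multiply matching signed halfwords, then add the two products. */
static uint32_t ref_smuad(uint32_t a, uint32_t b)
{
    int32_t lo = ref_s16(a) * ref_s16(b);
    int32_t hi = ref_s16(a >> 16) * ref_s16(b >> 16);
    return (uint32_t)lo + (uint32_t)hi; /* unsigned add wraps without UB */
}

/* SMLALD: same two products, accumulated into a 64-bit value. */
static uint64_t ref_smlald(uint32_t a, uint32_t b, uint64_t acc)
{
    int64_t lo = (int64_t)ref_s16(a) * ref_s16(b);
    int64_t hi = (int64_t)ref_s16(a >> 16) * ref_s16(b >> 16);
    return acc + (uint64_t)lo + (uint64_t)hi;
}

/* PKHBT: bottom halfword of a combined with top halfword of (b << shift). */
static uint32_t ref_pkhbt(uint32_t a, uint32_t b, unsigned shift)
{
    return (a & 0x0000FFFFu) | ((b << (shift & 31u)) & 0xFFFF0000u);
}

/* Small self-check; call it from a host-side test harness. */
static void ref_selfcheck(void)
{
    assert(ref_usad8(0x01020304u, 0x04030201u) == 8u);
    assert(ref_qadd(0x7FFFFFFFu, 1u) == 0x7FFFFFFFu);
    assert(ref_smuad(0x00020003u, 0x00040005u) == 23u);
    assert(ref_smlald(0x00020003u, 0x00040005u, 0x1FFFFFFFFull) == 0x200000016ull);
    assert(ref_pkhbt(0x11112222u, 0x00003333u, 16u) == 0x33332222u);
}
```

For instance, `ref_usad8(0x01020304, 0x04030201)` sums |4-1| + |3-2| + |2-3| + |1-4| = 8. The `ref_smlald` model also shows why the 64-bit macros split and rejoin the accumulator: the instruction takes and returns the accumulator as two 32-bit registers, which the model collapses into one `uint64_t` addition.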

mbed official
